Author: Jyotidip Barman

We have long been tied to our input devices, but cutting-edge research in gesture-based technology is starting to change that. Gesture-based computing is turning the stuff of science-fiction books and movies into reality and bringing us closer to our machines. Think of the futuristic Hollywood film ‘Minority Report’. The scene that sticks in most people's minds is Tom Cruise standing in front of a transparent screen, using hand gestures to manipulate the data projected on it. Many of us were amazed that the entire process was carried out with two illuminated fingertips and no input device at all. This is the future the technology world is currently moving toward. Every year, millions are spent on research to make input devices more ergonomic. Now imagine a world without input devices, where bodily gestures act as inputs and the machine responds to them directly. This idea has given birth to the much-talked-about field known as gesture-based computing.

The iPhone revolutionized the way we interact with mobile phones when Apple introduced multi-touch gestures on a touch-screen phone. Since then we have seen steady improvement in this area, with more companies releasing touch-screen phones than ever before. Yet apart from voice commands, few of us had ever imagined navigating a phone without touching it at all. A team at Dartmouth College has developed a technology that lets you operate your phone's basic functions without using your hands. The EyePhone, as the name implies, uses eye tracking and eye actions such as winking to register input. It can work with any smartphone that has a front-facing camera: the system uses that camera to track the eye's movement across the phone's display and detects an eye blink to activate the target application.
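The EyePhone description boils down to two steps: map where the eye is looking to a spot on the screen, and treat a deliberate blink as a "click". The Python sketch below illustrates that idea only; it is not the Dartmouth team's implementation. It assumes some upstream eye tracker (not shown) already reduces each camera frame to a normalized gaze point and an eye-openness score. The `Frame` type, the 3 x 3 grid of apps, and all threshold values are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Sequence


@dataclass
class Frame:
    """One camera frame, reduced to hypothetical measurements from an eye tracker."""
    gaze_x: float    # estimated gaze position, 0.0 (left edge) .. 1.0 (right edge)
    gaze_y: float    # estimated gaze position, 0.0 (top edge) .. 1.0 (bottom edge)
    openness: float  # eye openness, 0.0 (closed) .. 1.0 (wide open)


def cell_for_gaze(x: float, y: float, rows: int, cols: int) -> int:
    """Map a normalized gaze point to the index of a cell in a rows x cols grid."""
    col = min(int(x * cols), cols - 1)  # clamp so x == 1.0 stays on screen
    row = min(int(y * rows), rows - 1)
    return row * cols + col


def detect_activations(
    frames: Iterable[Frame],
    apps: Sequence[str],
    rows: int = 3,
    cols: int = 3,
    closed_below: float = 0.2,  # hypothetical openness threshold for "eye closed"
    min_closed: int = 2,        # shorter closed runs are treated as noise
    max_closed: int = 6,        # longer closed runs mean the eyes are simply shut
) -> List[str]:
    """Return the apps activated by deliberate blinks, in order.

    A blink is a run of consecutive closed-eye frames whose length lies in
    [min_closed, max_closed]. It is registered when the eye reopens, and it
    activates the app under the last gaze point seen while the eye was open.
    A closed run still in progress at the end of the stream is not counted.
    """
    if len(apps) != rows * cols:
        raise ValueError("need exactly one app per grid cell")
    activated: List[str] = []
    closed_run = 0
    last_cell: Optional[int] = None
    for frame in frames:
        if frame.openness < closed_below:
            closed_run += 1  # gaze estimates are unreliable while the eye is shut
            continue
        # The eye is open again: did the preceding closed run count as a blink?
        if min_closed <= closed_run <= max_closed and last_cell is not None:
            activated.append(apps[last_cell])
        closed_run = 0
        last_cell = cell_for_gaze(frame.gaze_x, frame.gaze_y, rows, cols)
    return activated


if __name__ == "__main__":
    apps = ["Phone", "Messages", "Camera", "Mail", "Maps", "Music", "Clock", "Photos", "Settings"]
    looking_at_center = [Frame(0.5, 0.5, 0.9)] * 3
    blink = [Frame(0.5, 0.5, 0.05)] * 3
    print(detect_activations(looking_at_center + blink + [Frame(0.9, 0.1, 0.9)], apps))
```

Running the script prints `['Maps']`: the user looks at the center cell, blinks for three frames, and the center app is activated when the eye reopens. Ignoring very short closed runs filters out camera noise, while ignoring very long ones avoids firing when the user simply closes their eyes.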

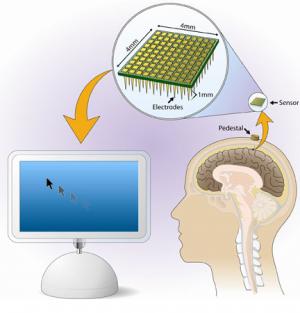

Gesture-based computing will not completely replace input devices such as the mouse, keyboard, or touch screen, but it will certainly ease the way we interact with machines. Apart from hand gestures, brain-controlled devices are likely to become prominent in the near future. And although current projects use only one gestural interface to control a device at a time, in the very near future we can expect several gestural interfaces, such as hand gestures and eye tracking, to be combined to generate a response from a device. This will bring us closer to our machines or, depending on how you look at it, make machines more human.